Published September 2025 as part of the proceedings of the first Alpaca conference on Algorithmic Patterns in the Creative Arts, according to the Creative Commons Attribution license. Copyright remains with the authors.

doi:10.5281/zenodo.17084388

I’m a visual artist and computer programmer. My work varies between installation artworks and live performances with a musician/band. I’m interested in visuals made up of repeated 2D and 3D objects, and the interplay between them. For my live work, I spend time listening to the music in advance and designing visuals that I think tell a story. I then interact with the running code during the performance.

The visuals I create tend to have a lot of structure: things composed of other things. There are lots of markup/data languages that are great at describing this, like HTML or SVG. Consider the example in figure 1, taken from the SVG specification. One can immediately understand that the stroke styling attributes apply to the two lines, and that these are together rotated and then translated – this structure is inherent in the language.

    <svg width="400px" height="120px" version="1.1">
      <g transform="translate(50,30)">
        <g transform="rotate(30)">
          <g fill="none" stroke="red" stroke-width="3" >
            <line x1="0" y1="0" x2="50" y2="0" />
            <line x1="0" y1="0" x2="0" y2="50" />
          </g>
        </g>
      </g>
    </svg>

Figure 1: a small example of Scalable Vector Graphics.

However, what SVG lacks is a mechanism for abstracting this structure: I am unable to explain that I now want 100 lines placed at random. It also lacks an easy way to describe animation: I have to either clumsily encode this as complex, special-purpose tags or graft chunks of JavaScript into the markup.

While programming languages make those things easy, they make describing structure surprisingly hard. Take the hugely popular p5.js [1], for example. Figure 2 shows an introductory example from the p5.js website.

    function setup() {
      createCanvas(200, 200, WEBGL);
    }

    function draw() {
      background(220);
      noStroke();
      lights();
      orbitControl();

      push();
      translate(-50, 0, -100);
      fill('red');
      sphere(50);
      pop();

      push();
      translate(0, -100, -300);
      fill('green');
      sphere(50);
      pop();

      push();
      translate(25, 25, 50);
      fill('blue');
      sphere(50);
      pop();
    }

Figure 2: a short Processing sketch.

Grouping is achieved in p5.js with `push()` and `pop()` operations. Keeping track of the nesting of these operations requires careful studying of the code. Take the blank lines out of this example and any visible structure disappears altogether.

I’ve been programming in Python for a hilariously long time and it tends to be the first hammer I reach for. It’s pretty common to see code for creating data structures in Python like that shown in figure 3, which is a fragment of code using the Panda3D [2] framework.

    group = self.render.attachNewNode('group')
    group.setPos(100, 100, 100)

    sphere1 = loader.loadModel('sphere.obj')
    sphere1.setScale(10)
    sphere1.setPos(-20, 20, 0)
    sphere1.reparentTo(group)

    sphere2 = loader.loadModel('sphere.obj')
    sphere2.setScale(20)
    sphere2.setPos(25, 5, 10)
    sphere2.reparentTo(group)

Figure 3: Python code using the Panda3D framework.

As with the `push()`/`pop()` of p5.js, we’re left to create the structure of the scene graph by hand: the two spheres are inside the group, but this is only apparent from careful inspection of the method calls. This is a shame, because Python is otherwise a very good language for surfacing context. Consider the example in figure 4 of opening and writing to a file in Python.

    with open('output.txt', 'w') as output:
        output.write("Hello ")
        output.write("world!\n")

Figure 4: Python file handling with `with`.

Even without being a Python programmer, one could probably guess that the `output` variable represents the open file, that it is available within the two indented lines and closed afterwards. The `with` statement in Python manages context. Excitingly, there is a standard protocol for creating your own objects that work this way. By designing objects that implement the context management protocol to push themselves onto a “current parent” stack, and then using that when we create new objects, we could use `with` statements to structure data.

    with Group(position=(100, 100, 100)):
        Sphere(scale=10, position=(-20, 20, 0))
        Sphere(scale=20, position=(25, 5, 10))

Figure 5: (ab)using `with` to create structured data.

Consider the code in figure 5. In this imagined framework, a `Group` object is created and it sets itself, via the `with` statement, as the current parent. The two `Sphere` objects then automatically add themselves as children of the `Group` object. This immediately feels like SVG, but has the advantage that we can work programmatically. Want 100 random spheres instead? Use a `for` loop and variable parameters, as in figure 6.

    with Group(position=(100, 100, 100)):
        for i in range(100):
            r = uniform("radius", i) * 20
            x = (uniform("x", i) - 0.5) * 200
            y = (uniform("y", i) - 0.5) * 200
            z = (uniform("z", i) - 0.5) * 200
            Sphere(scale=r, position=(x, y, z))

Figure 6: using code to abstract the construction of structured data.
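To make the idea concrete, here is a minimal sketch of how such an imagined framework might implement the “current parent” stack with Python’s context management protocol. The `Node` base class and its `_stack` attribute are hypothetical names chosen for illustration; only `Group` and `Sphere` appear in the figures, and this is not the code of the 2019 framework.

```python
class Node:
    """A tree node that parents itself to the innermost open `with` block."""

    _stack = []  # hypothetical "current parent" stack shared by all nodes

    def __init__(self, **attributes):
        self.attributes = attributes
        self.children = []
        if Node._stack:
            # Attach to whichever node most recently entered a `with` block
            Node._stack[-1].children.append(self)

    def __enter__(self):
        Node._stack.append(self)
        return self

    def __exit__(self, exc_type, exc_value, traceback):
        Node._stack.pop()
        return False  # never swallow exceptions raised inside the block


class Group(Node):
    pass


class Sphere(Node):
    pass


# The code from figure 5, with the group bound to a name so it can be inspected
with Group(position=(100, 100, 100)) as group:
    Sphere(scale=10, position=(-20, 20, 0))
    Sphere(scale=20, position=(25, 5, 10))
```

Because the parent is found at construction time, the indentation of the `with` block is the only thing that decides where a node ends up in the tree.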

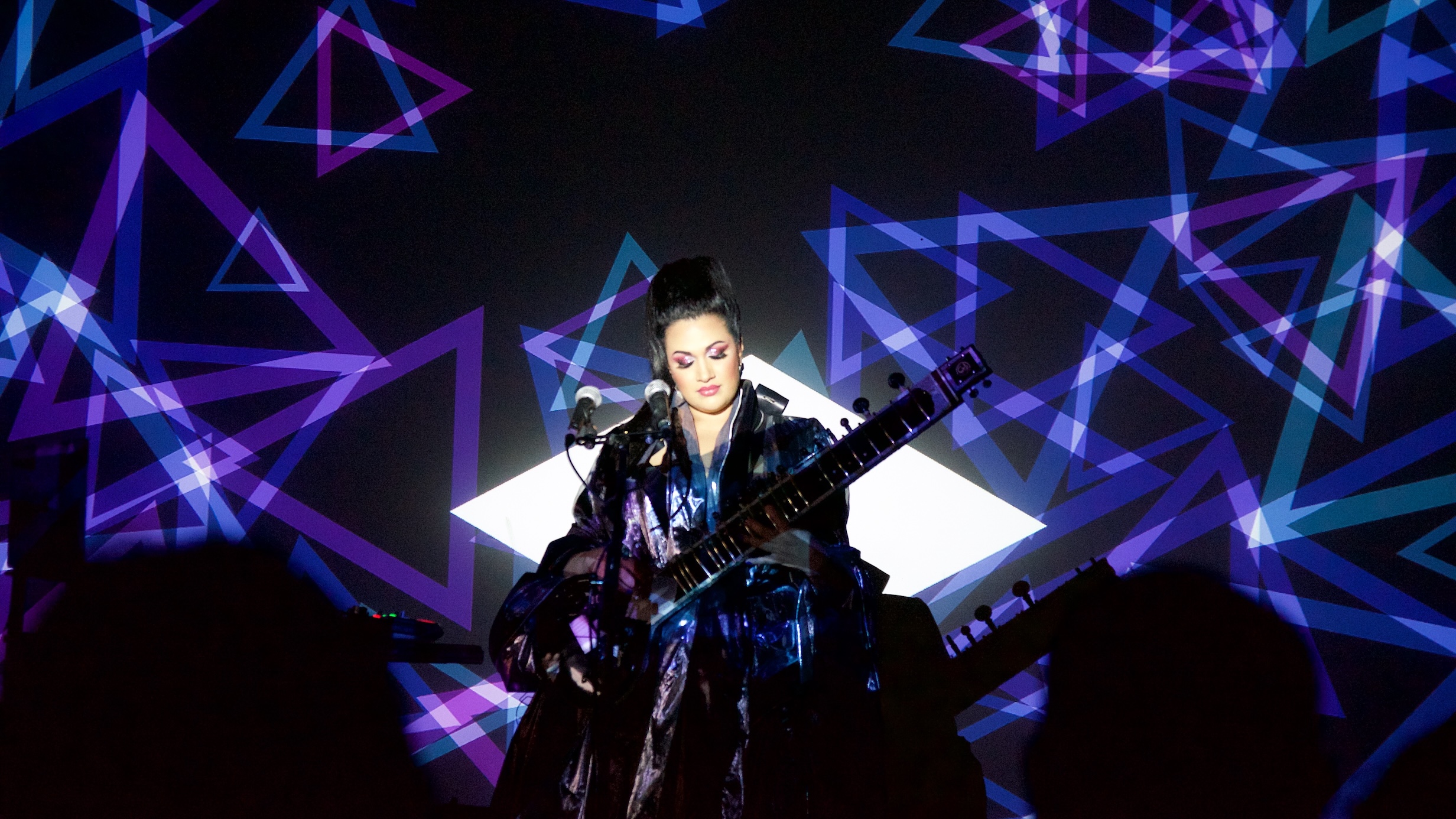

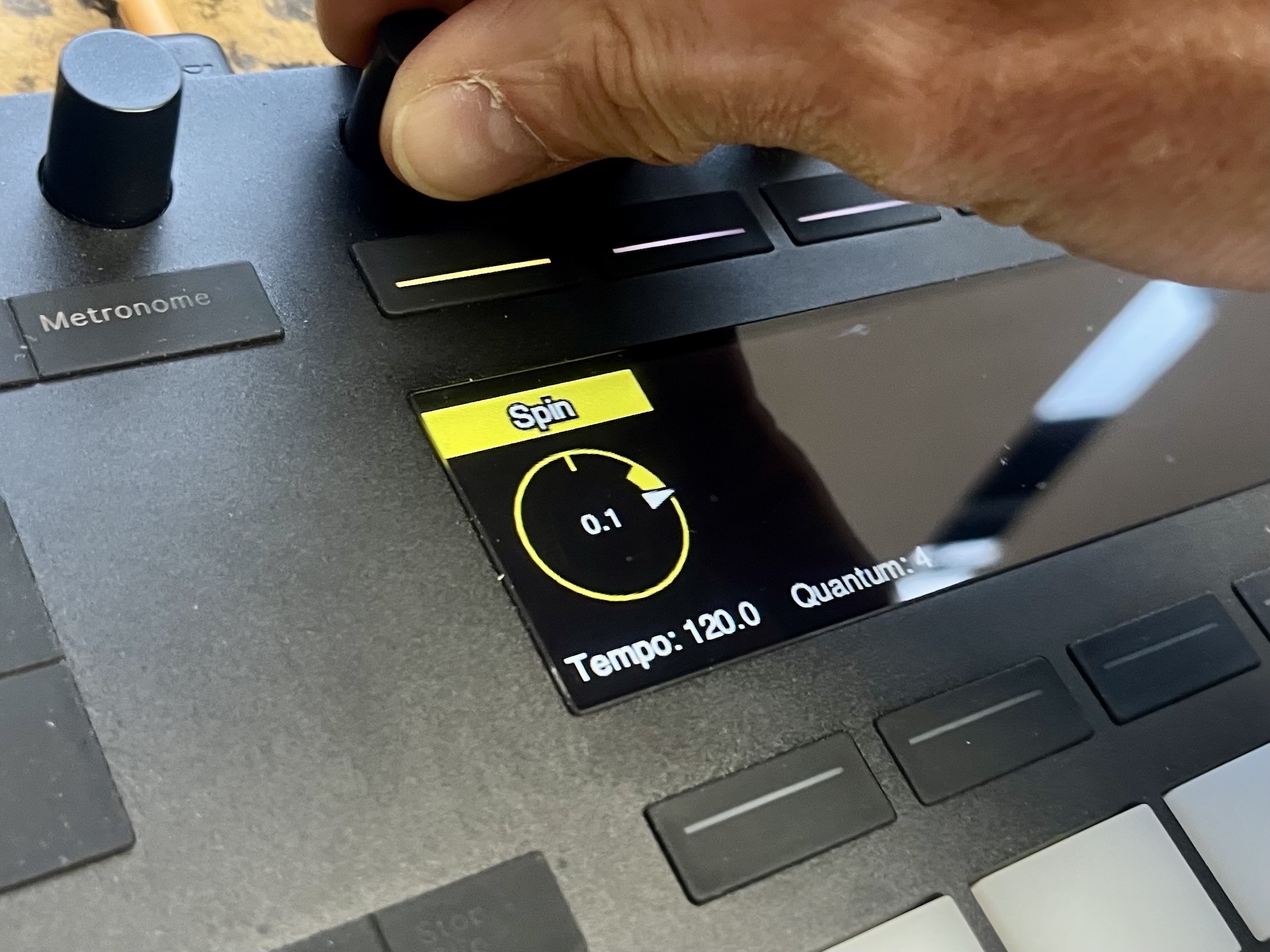

In fact, I built a complete 2D animation framework using this approach in 2019 for a live show with BISHI [3]. That framework worked on the principle of declaring a tree of renderable objects and then animating their attributes. For the show, I worked with a fixed set of triangles and designed different patterns of movement and colour for each song, as can be seen in figure 7.

In 2021 I was invited to repeat this show for BISHI at the Southbank Centre. Rather than do the sensible thing of just re-using the code from last time, I decided to rethink my solution. I liked the `with`-as-structure approach, but many parts of the embedding in Python felt very awkward. Being a recovering Haskell programmer, there is no problem to me that a domain-specific language doesn’t look like the solution to…

The result of a furious period of reworking this performance from scratch was the first version of Flitter, a language for constructing structured data, combined with an engine for rendering this structured data as – originally 2D – visuals. The system has developed significantly since 2021, so I’m going to describe it as it exists now.

Flitter follows my original Python embedding pretty closely, including borrowing Python’s indentation-based blocks. The main differences are that the language is functional and has specific syntax for constructing trees. Figure 8 shows the imaginary example from figure 6 translated into Flitter code.

    !window size=1280;720
        !canvas3d samples=4 viewpoint=200
            !light color=1 direction=-1
            !transform translate=100
                !material color=1
                    for i in ..100
                        let r=uniform(:r)[i] * 20
                            pos=(uniform(:pos;i)[..3] - 0.5) * 200
                        !sphere size=r position=pos

Figure 8: a short Flitter example.

Here’s an explanation of some of the syntax to make sense of the example:

- `:symbols`, and tree nodes
- `;` is the in-line vector concatenation operator
- `!name` creates a new tree node of kind `name`
- `name=` after a node sets the `name` attribute of the node
- `..` creates half-open range vectors (start defaults to 0)
- `for` iterates over a vector evaluating the indented block and concatenates the results
- `let` introduces names scoped to the end of the current block
- `uniform()` is a function that takes a seed vector and returns an infinite vector of pseudorandoms
- `[i]` indexes a vector

Making the root datatype of the language be a vector was based on my experiences of using NumPy [4]. It is incredibly useful to be able to do operations on arbitrary-sized arrays. In this example, `uniform(:pos;i)` creates a unique infinite vector of pseudorandom values per sphere, `[..3]` takes the first three values and then the subtract and multiply apply to all three at once. In general, short vectors are repeated out to the expected length – this includes the `viewpoint`, `direction`, `translate` and `color` attributes in the example, which all expect 3-vectors.
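The two vector behaviours described here can be approximated in plain Python. This is only a sketch under stated assumptions: the SHA-256 hashing is a stand-in for whatever generator Flitter actually uses, and `fit` and `sphere_position` are hypothetical helper names, not part of Flitter.

```python
import hashlib
from itertools import islice


def uniform(*seed):
    """Yield an endless, repeatable stream of floats in [0, 1) for a seed.

    Assumption: hashing (seed, index) stands in for Flitter's own generator.
    """
    n = 0
    while True:
        digest = hashlib.sha256(repr((seed, n)).encode()).digest()
        # Keep the top 53 bits so the value maps exactly onto a float below 1
        yield (int.from_bytes(digest[:8], "big") >> 11) / 2**53
        n += 1


def fit(vector, length):
    """Repeat a short vector out to the expected length, e.g. (1,) -> (1, 1, 1)."""
    return tuple(vector[i % len(vector)] for i in range(length))


def sphere_position(i):
    """Roughly what (uniform(:pos;i)[..3] - 0.5) * 200 computes for sphere i."""
    first_three = islice(uniform("pos", i), 3)
    return tuple((value - 0.5) * 200 for value in first_three)
```

Calling `sphere_position(3)` twice gives the same three coordinates, each somewhere between -100 and 100, and `fit((1,), 3)` shows how an attribute such as `color=1` can stand for a 3-vector.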

A functional language works very well for describing tree structures:

the code is itself a tree of expressions – “it’s turtles all the way

down”. The Flitter language is purely functional5,

lacking any direct output capability – it is only used to

describe structured data. The Flitter engine passes this data to

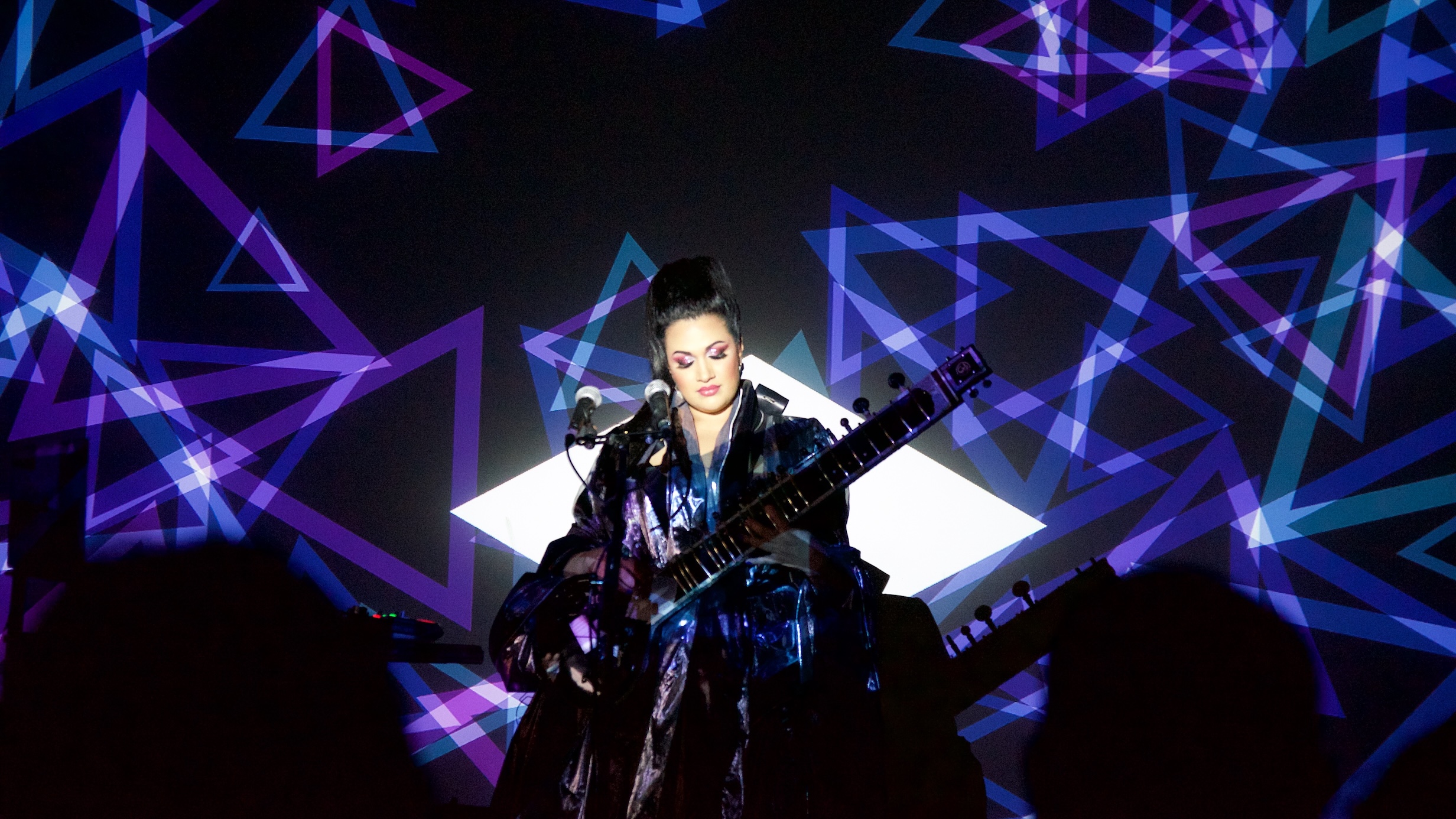

renderers that translate it into visuals. The result of running this

example in the Flitter engine is shown in figure 9. It will always be

exactly this image because the uniform() function is

deterministic. In fact, it is this image at 60 frames per second as the

engine runs a continous read-evaluate-render loop.

Animation is achieved by varying the data tree over time. For example, the `time` global name evaluates to the current frame time in seconds, so adding a `rotate=time/10` attribute to the `!transform` node will result in the arrangement of spheres spinning around in space (on all three axes), as shown in figure 10.

Figure 10: an animated version of the code from figure 8.
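The principle of animating by re-evaluating a pure description on every frame can be sketched in a few lines of Python. The dictionary layout and the `scene` and `run` names are assumptions made for this illustration and do not reflect how the Flitter engine represents its trees.

```python
def scene(time):
    """Return the whole tree for one frame as plain data; nothing is drawn here."""
    return {
        "kind": "transform",
        "attributes": {"translate": 100, "rotate": time / 10},
        "children": [{"kind": "sphere", "attributes": {"size": 20}, "children": []}],
    }


def run(frames, fps=60, render=lambda tree: None):
    """Evaluate the scene once per frame and hand each tree to a renderer."""
    trees = []
    for frame in range(frames):
        tree = scene(frame / fps)  # same time in, same tree out
        render(tree)
        trees.append(tree)
    return trees


trees = run(3)
```

The description holds no state of its own: the only thing that changes between frames is the time value passed in.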

The lighting in this animation is fixed to the frame of reference of the viewer rather than the spheres. In Flitter this is obvious from looking at the code. To instead fix the light to the spheres’ frame of reference, one would just move the `!light` node to be indented inside the `!transform` node, as shown in figure 11. Using Python and Panda3D, I have to read the code for a light to understand how it is attached in the scene graph; in Flitter, I look to see where the code is.

    !window size=1280;720
        !canvas3d samples=4 viewpoint=200
            !transform translate=100 rotate=time/10
                !light color=1 direction=-1
                !material color=1
                    for i in ..100
                        let r=uniform(:r)[i] * 20
                            pos=(uniform(:pos;i)[..3] - 0.5) * 200
                        !sphere size=r position=pos

Figure 11: a spinning example with lighting direction fixed to the spheres instead of the viewer.

I find this indentation-based-structure approach a fluid way to develop. I often work “outwards”, writing and testing fragments of code and then iteratively indenting them and pre-pending additional structure, e.g., adding transformations, loops and filters. However, this may just be decades of developing in Python (and Haskell before that).

In some ways, Flitter is more like a text-based modelling tool than a general purpose programming language. The structure of the 3D renderer compares to the OpenSCAD solid modelling language [6]. The main difference is that the Flitter language is designed for continuous change and is agnostic about what it is describing. Different renderers can interpret the structured data in wildly different ways: I’ve written renderers that control DMX stage lighting, LED strips and steerable RGB lasers. In this respect, the language might be closer to JSX [7], which mixes JavaScript and XML to generate DOM nodes for rendering in a browser.

XSLT [8] takes the data/programming language overlap in the opposite direction to JSX, creating a complete language in XML tags. Although designed for transforming XML, one could use it to create new XML instead. Probably the closest comparable language to Flitter that I’m aware of is YS [9], which extends the data serialisation language YAML [10] to be a full functional scripting language. However, both of these languages suffer in expressiveness of syntax by maintaining compatibility with the underlying data format.

There is no `setup()`/`draw()` differentiation in Flitter: it’s either all `setup()` or all `draw()` depending on how you look at it. Conceptually, the renderer is told to render a new window, containing a new 3D canvas, on each frame. In fact, a single window and all of the various OpenGL framebuffers and shader programs are retained and reused. A single sphere mesh model is constructed on first use and the 3D renderer collects all 100 instances of it together and dispatches them simultaneously to the GPU with the lighting configuration.

However, the engine checks for changes to the source text on each frame, allowing interactive programming. Changing the `size` attribute of the `!window` node will immediately resize the window and all of the framebuffers. Changing the `samples` attribute, which controls OpenGL multi-sample anti-aliasing, will discard and recreate the depth and colour render buffers.
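One way to picture this retain-and-rebuild behaviour is a cache that compares each frame’s declared settings with those used last time. The sketch below is an illustration of the general pattern only; `FramebufferCache` and its methods are hypothetical and are not taken from the Flitter engine.

```python
class FramebufferCache:
    """Keep expensive resources between frames; rebuild only when settings change."""

    def __init__(self):
        self._resources = {}  # node key -> (settings, resource)
        self.created = 0      # counts how many resources have been built

    def get(self, key, **settings):
        cached = self._resources.get(key)
        if cached is not None and cached[0] == settings:
            return cached[1]  # same declaration as last frame: reuse it
        # New or changed declaration: drop the old resource and build another
        self.created += 1
        resource = {"id": self.created, **settings}
        self._resources[key] = (settings, resource)
        return resource


cache = FramebufferCache()
first = cache.get("canvas3d", size=(1280, 720), samples=4)   # built
second = cache.get("canvas3d", size=(1280, 720), samples=4)  # reused
third = cache.get("canvas3d", size=(1280, 720), samples=8)   # rebuilt
```

The program declares the same node on every frame, and only an actual change in its attributes costs anything.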

A good example of this declarative approach is videos. A `!video` node introduces a video into the compositing tree. This takes a `position` attribute specifying what frame to render. A video is played by just varying this value. The code in figure 12 cross-fades between two videos, with the first starting at 2 seconds in and the second playing at half speed. The engine opens the files on demand and seeks to the correct location, then advances at the correct rate, reading frames and compositing them, and finally closes the files when they are no longer in use.

Flitter is designed to let me focus on what I want to see on screen instead of how to achieve that.

    %pragma tempo 60

    !window size=1920;1080
        if beat < 10
            !video filename='videoA.mp4' position=2+beat
        if beat >= 5
            !video filename='videoB.mp4' position=(beat-5)/2 alpha=linear((beat-5)/5)

Figure 12: a video-player example.
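The arithmetic in figure 12 can be worked through in Python. At a tempo of 60 there is one beat per second, so `beat` can be read as seconds. The sketch assumes that `linear()` clamps its argument to the range 0 to 1 and that a video with no `alpha` attribute is fully opaque; both are assumptions of this illustration, and `videos_at` is a hypothetical helper.

```python
def linear(x):
    """Clamp x into the range 0..1 (an assumed reading of Flitter's linear())."""
    return max(0.0, min(1.0, x))


def videos_at(beat):
    """Return (filename, position in seconds, alpha) for each video shown at this beat."""
    layers = []
    if beat < 10:
        # First video starts 2 seconds in and plays at normal speed
        layers.append(("videoA.mp4", 2 + beat, 1.0))
    if beat >= 5:
        # Second video plays at half speed and fades in over five beats
        layers.append(("videoB.mp4", (beat - 5) / 2, linear((beat - 5) / 5)))
    return layers
```

At beat 7.5, for instance, this gives the first video at 9.5 seconds and the second at 1.25 seconds with an alpha of 0.5; from beat 10 onwards only the second video remains.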

Flitter was designed from the start for my live performances. However, I only edit the code during a performance if something has gone horribly wrong. When I did visuals for BISHI in 2019, I actually pre-scripted everything and just pressed play at the right moments. For the 2021 performance I wanted to push myself to trigger all of the individual visual elements live and continually adjust parameters controlling their movement and colour.

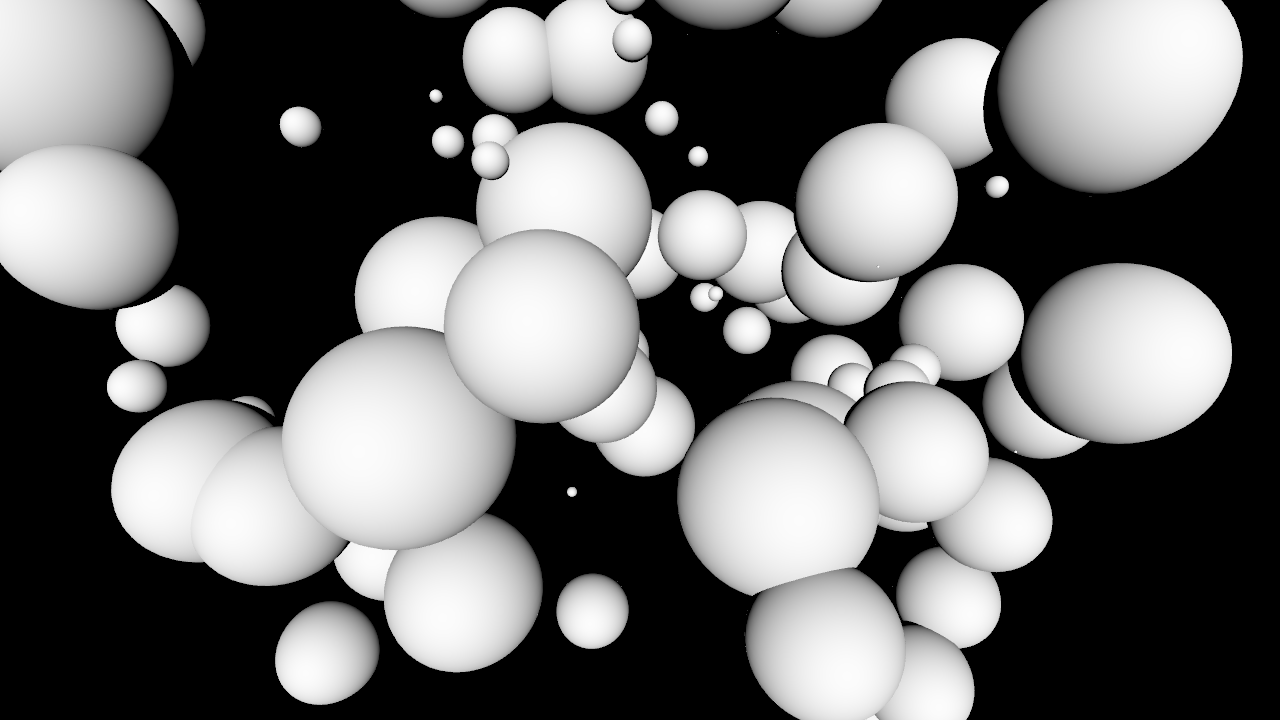

I felt my original software’s web-based interface would be too clunky for this, so the other motivation for the rewrite was to allow me to use a MIDI controller. Since this first version, Flitter has supported the Ableton Push 2 (since superseded by the Push 3 [11]), which has a screen, a touch-sensitive slider, 11 encoders, 64 pressure-sensitive pads and an assortment of buttons. I have no idea how to use it to make music, but I know how to talk to it because Ableton has helpfully documented that [12]. This makes it a really interesting general-purpose controller [13].

The configuration is itself done in Flitter code. Figure 13 shows a very simple setup involving configuring the first encoder as a continuous value in turns. The current value and label are shown on the Push screen, as can be seen in figure 14. As the configuration is all Flitter code, it can take full advantage of the language – for instance configuring an array of pressure pads with a loop – and this configuration can change in response to events – such as touching a pad to activate new encoders.

    !controller driver=:push2
        !rotary id=1 color=1;1;0 style=:continuous label='Spin' state=:spin

Figure 13: Flitter code for configuring a single Push 2 encoder.

Values are communicated from the controller back to the running program through a persistent state mapping. The `state=:spin` attribute on `!rotary` specifies a mapping key to use. Within a Flitter program, the `$` operator looks up the value of a key. We could alter the spheres code to use the encoder value for the rotation transformation, as shown in figure 15, so that spinning the encoder will spin the visuals.

    !window size=1280;720
        !canvas3d samples=4 viewpoint=200
            !transform translate=100 rotate=$:spin
                !light color=1 direction=-1
                !material color=1
                    for i in ..100
                        let r=uniform(:r)[i] * 20
                            pos=(uniform(:pos;i)[..3] - 0.5) * 200
                        !sphere size=r position=pos

Figure 15: the spheres example, but with the rotation controlled by the encoder.
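The split between a controller that writes values and a program that only reads them can be sketched with an ordinary dictionary. The function names and the accumulate-in-turns behaviour are assumptions for illustration; the sketch does not show how Flitter’s Push 2 driver works internally.

```python
state = {}  # persistent mapping shared by the controller and the program


def on_encoder_turned(key, delta_turns):
    """Controller side: accumulate the encoder movement under its state key."""
    state[key] = state.get(key, 0.0) + delta_turns


def scene():
    """Program side: read the current value, much as $:spin does in figure 15."""
    return {
        "kind": "transform",
        "attributes": {"translate": 100, "rotate": state.get("spin", 0.0)},
    }


before = scene()["attributes"]["rotate"]
on_encoder_turned("spin", 0.25)
on_encoder_turned("spin", 0.25)
after = scene()["attributes"]["rotate"]
```

The program never handles controller events itself: it is simply re-evaluated with whatever the mapping currently holds.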

I’ve found using a MIDI controller is the sweet spot for my live work: I interactively code a palette of patterns and effects in advance, and then explore that palette during the performance with physical controls. I’ve found that I discover more interesting combinations of parameters with buttons and knobs than I do editing numbers. My experiences here parallel the parameterized-Rust visuals of Jessica Stringham [14]. Although I’ve made my renderers much more general-purpose and pushed all of the pattern design into the configuration language itself, the way we use a MIDI controller is almost identical.

I’ve found that Flitter works well for succinctly describing interesting algorithmic patterns, such as the example shown in figures 16 and 17, which demonstrates using a bloom filter, orthographic 3D rendering, fog, ordered transparency and multiple moving lights, plus compound loops and the built-in functions for Beta(2,2) distribution pseudorandoms and OpenSimplex 2S noise [15].

    %pragma tempo 60

    let N=25
        NLIGHTS=10
        SCALE=3

    !window size=1080
        !bloom radius=15
            !canvas3d orthographic=true samples=4 viewpoint=100 width=175 fog_max=300
                for i in ..NLIGHTS
                    let axis=normalize(uniform(:axis;i)[..3]-0.5)
                        color=hsv(i/NLIGHTS;1;1)
                        period=15+30*beta(:period)[i]
                        phase=uniform(:phase)[i]
                    !transform matrix=point_towards(axis, 0;1;0) rotate_z=beat/period+phase
                        !light color=color*5k position=150;0;0
                !material color=0 roughness=0.25
                    !transform scale=100/N
                        for x in ..N, y in ..N, z in ..N
                            let n=noise(:n, SCALE*x/N, SCALE*y/N, SCALE*z/N, beat/10)
                            if n > 0
                                !box position=(x;y;z)-N/2 size=0.75 transparency=1-n

Figure 16: a more complete Flitter animation example.

Figure 17: the code in figure 16 running.

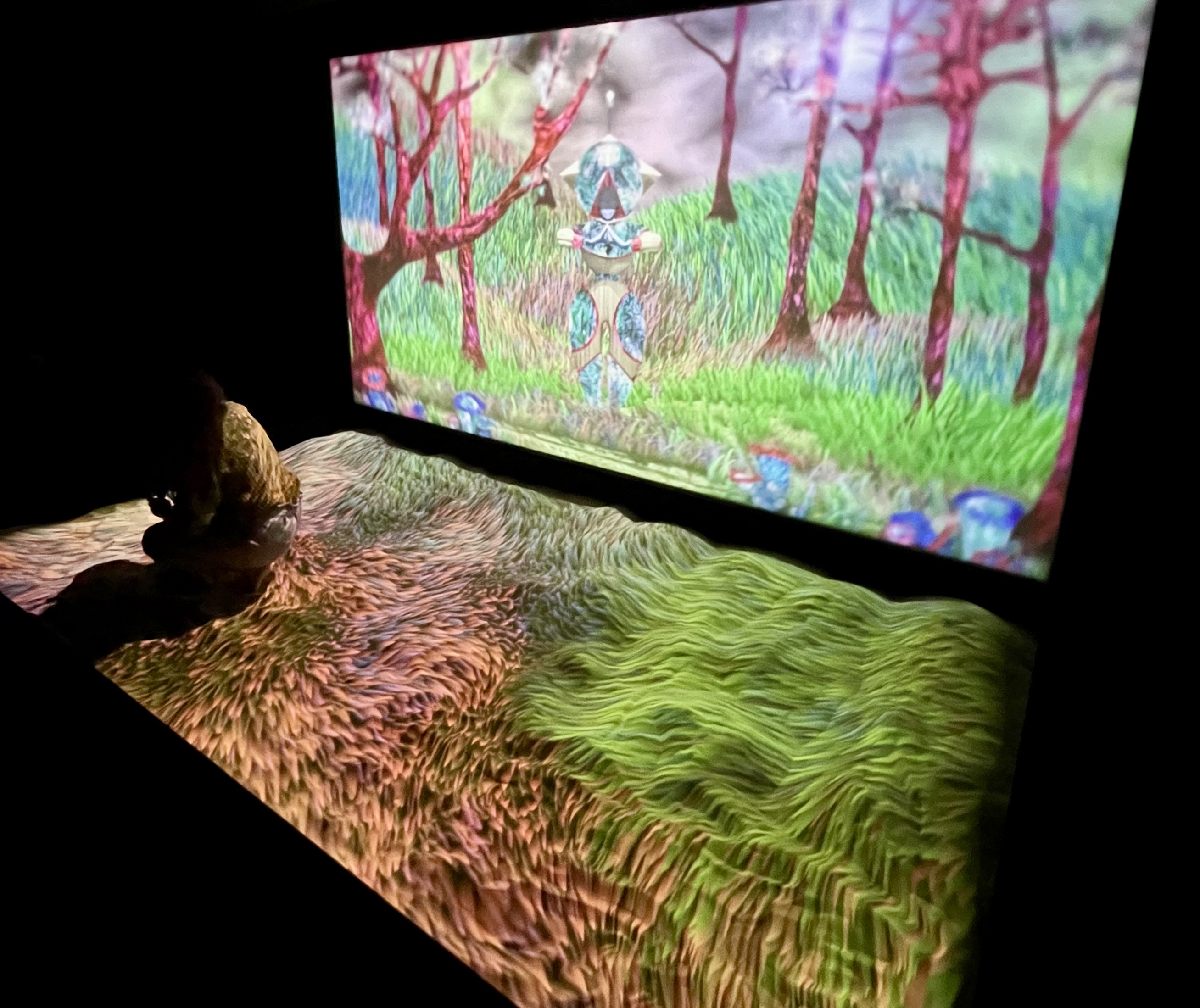

Despite being designed for performance work, I’ve now used Flitter in three different public installation artworks. “Subterranean Elevator” [16], created with artist Di Mainstone, is a long-term interactive gallery show. This runs over two projections and includes a synchronised video, a multi-channel soundscape and audience interactivity via a depth camera. Figure 18 shows an interactive grass simulation from the first chapter of the 20-minute looping installation. The piece has over a thousand lines of Flitter code and runs unattended all day, which is a significantly different use for Flitter than I’d expected.

As well as the features already shown, the Flitter language has a module system, comprehensions, higher-order functions and closures. It compiles to an internal virtual machine and has a comprehensive partial-evaluator for optimising static code. It is available as a Python package and command-line tool that can be installed straight from PyPI [17] on macOS, Windows and Linux. The source code is all on GitHub [18] with a permissive 2-clause BSD license. The language and most of the rendering system are documented [19] and there is also a separate examples repository [20].

Although at times it can feel limiting, I’ve found separating and simplifying the description of visuals from the complexity of their rendering to be a powerful tool for pushing me to focus on design instead of implementation.

[1] “p5.js library”, p5.js Contributors and the Processing Foundation, https://p5js.org

[2] “Panda3D: Open Source Framework for 3D Rendering & Games”, Carnegie Mellon University, https://www.panda3d.org

[3] “BISHI: artist, composer and producer”, https://www.bishi.co.uk

[4] “NumPy: The fundamental package for scientific computing with Python”, NumPy Team, https://numpy.org

[5] “Purely functional programming”, Wikipedia, https://en.wikipedia.org/wiki/Purely_functional_programming

[6] “OpenSCAD: The Programmers Solid 3D CAD Modeller”, https://openscad.org

[7] “JSX: Draft / August 4, 2022”, https://facebook.github.io/jsx/

[8] “XSL Transformations (XSLT) Version 3.0”, W3C, https://www.w3.org/TR/xslt-30/

[9] “YS: YAML Done Wisely”, Ingy döt Net, https://yamlscript.org

[10] “The Official YAML Web Site”, https://yaml.org

[11] “Push - a standalone expressive instrument”, Ableton, https://www.ableton.com/en/push/

[12] “Ableton Push 2 MIDI and Display Interface Manual”, Ableton, https://github.com/Ableton/push-interface

[13] Full disclosure: Ableton gave me an artist discount on my Push – I’m not sure they knew I had no intention of using or promoting their software.

[14] Jessica Stringham, “Parameterizing Patterns for Generative Art and Live Coding”, Algorithmic Pattern Salon 2023, https://alpaca.pubpub.org/pub/dpdnf8lw

[15] “OpenSimplex2: Successors to OpenSimplex Noise, plus updated OpenSimplex”, Kurt Spencer, https://github.com/KdotJPG/OpenSimplex2

[16] “Subterranean Elevator” exhibition at Williamson Gallery, Birkenhead, 12th February – December 2025, https://williamsonartgallery.org/event/subterranean-elevator/

[17] flitter-lang package on PyPI, https://pypi.org/project/flitter-lang/

[18] “Flitter: A functional programming language and declarative system for describing 2D and 3D visuals”, Jonathan Hogg, https://github.com/jonathanhogg/flitter

[19] “Flitter documentation”, Jonathan Hogg, https://flitter.readthedocs.io/

[20] “Flitter examples”, Jonathan Hogg, https://github.com/jonathanhogg/flitter-examples